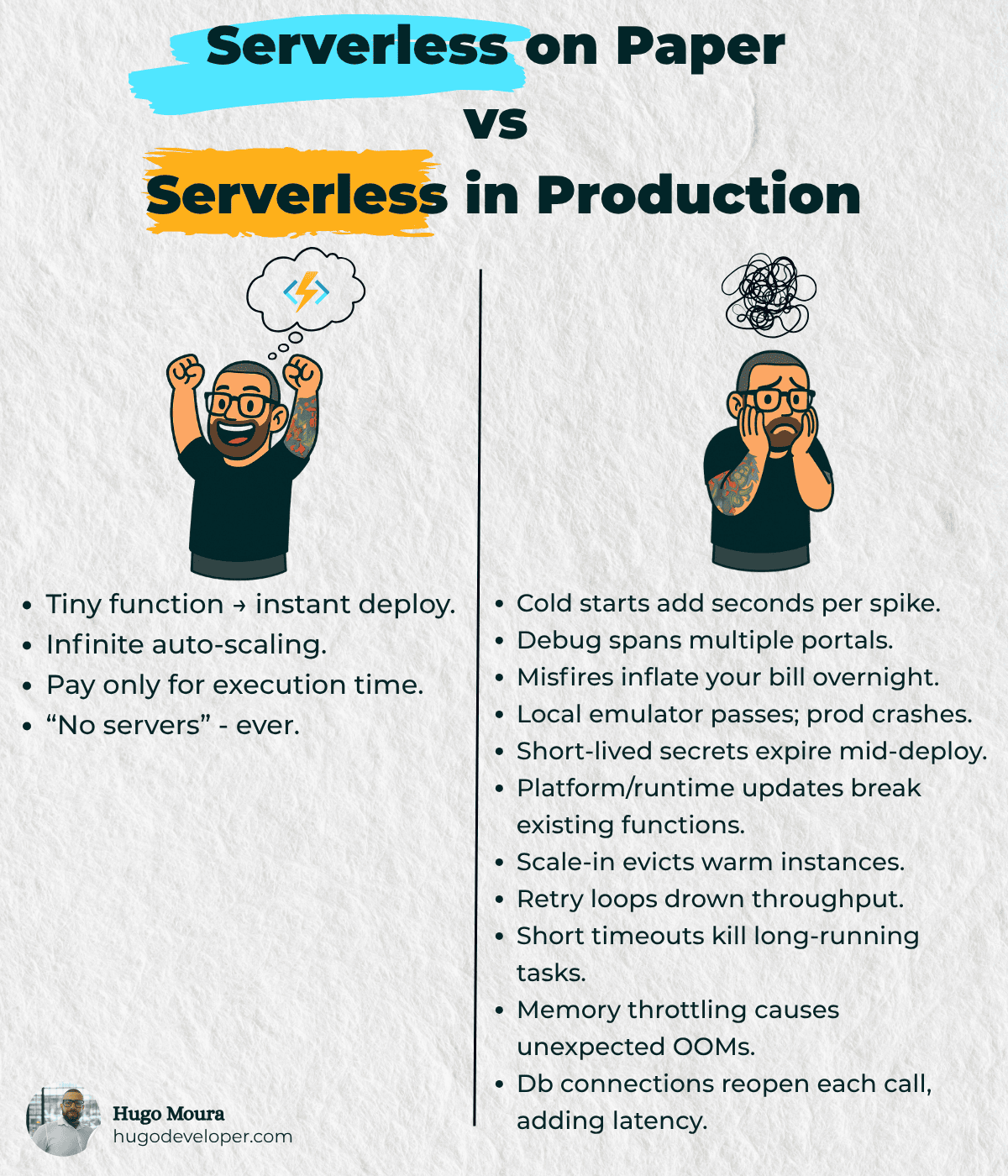

Serverless on Paper vs Serverless in Production

Over the past few years, microservices and event-driven architectures have become shorthand for modernization. In that landscape, AWS Lambda, Azure Functions, and Google Cloud Functions steal the spotlight: they let you fire off code on demand, wire events through queues, and "scale infinitely" without losing sleep over infrastructure.

If you work with CI/CD or DevOps, or have containerized a monolith, chances are you've heard (or pitched) the line: "just move to serverless and cost, scale, and deploy speed are solved in one shot." Drop "serverless" into your microservices slide deck and voilà, problem solved, at least on paper.

Reality tends to be harsher. Nearly every team that ships Functions to production discovers that cold starts, fragmented observability, and unpredictable costs can be as painful as running your own cluster. I fell for the hype too: the proof-of-concept felt magical; the first traffic spike triggered PagerDuty nightmares.

This post is the straight-up hallway chat: no vendor buzzwords, just the topics you probably searched for to get here, such as "serverless pitfalls", "performance tuning", "cost optimization", "monitoring", and "autoscaling". Let's break down, item by item, the challenges that arise when running functions in production and how they impact throughput, latency, and ROI.

Reality check

These are hard-earned lessons from my deployments: issues nobody advertises, yet the first to bite when your Functions hit production.

Cold starts add seconds per spike

The platform must spin up a new container when no "warm" instance is available. During spikes, multiple cold starts accumulate; if your function runs within a VNet or loads heavy libraries, the latency jumps dramatically.
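
To see how often this happens in your own workload, it helps to tag each invocation as cold or warm. Here is a minimal sketch, assuming a platform that keeps the Python module loaded between invocations on a warm instance; the `handler` name and the returned fields are hypothetical, not any provider's API.

```python
import time

# Module scope runs once per container instance, i.e. once per cold start.
_IS_COLD = True
_LOADED_AT = time.monotonic()


def handler(event):
    """Hypothetical entry point that reports whether this call was a cold start."""
    global _IS_COLD
    was_cold = _IS_COLD
    _IS_COLD = False  # every later call on this instance is warm
    return {
        "cold_start": was_cold,
        "instance_age_s": round(time.monotonic() - _LOADED_AT, 3),
    }
```

Emitting `cold_start` as a metric lets you count cold starts per spike instead of guessing from latency graphs.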

Debugging is scattered across multiple portals

Logs, metrics, and configuration settings live in separate dashboards (Functions, Application Insights, Storage, API Management). You lose context every time you switch between them, and your mean-time-to-innocence shoots up.

Misfires inflate your bill overnight

Automatic re-executions on transient failures treat your credit card as limitless: a combination of an improperly configured trigger and the default back-off policy can launch thousands of ghost runs while nobody's watching.
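
The arithmetic behind that surprise is simple enough to sketch. The function below is a rough estimate only, assuming a pay-per-use model billed by GB-seconds plus a per-request fee; `ghost_run_cost` and every rate you pass in are placeholders, so plug in your provider's actual price sheet.

```python
def ghost_run_cost(messages, retries_per_message, avg_duration_s, memory_gb,
                   price_per_gb_second, price_per_million_requests):
    """Estimate what the retried executions alone add to the bill.

    All prices are caller-supplied placeholders, not real provider rates.
    """
    runs = messages * retries_per_message
    compute = runs * avg_duration_s * memory_gb * price_per_gb_second
    requests = runs / 1_000_000 * price_per_million_requests
    return runs, compute + requests
```

Calling it with the number of stuck messages and the retry count from your trigger configuration gives a quick upper bound on what one bad night can cost.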

Works in the local emulator, crashes in prod

The local runtime rarely mirrors the provider’s sandbox. Native extensions, memory ceilings, and network conditions all vary, so what’s 100% green in your dev container can devolve into a live stack trace in production.

Short-lived secrets expire mid-deploy

Keys rotated by vaults or managed identities can expire during long pipelines. A subsequent stage encounters an invalid token, the deployment fails, and you must manually revert the rollout.
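
One way to catch this before a stage starts is to compare the token's remaining lifetime with how long the stage usually takes. This is a minimal sketch, assuming your pipeline can read the token's expiry time; `token_covers_stage` and the two-minute safety margin are hypothetical choices.

```python
from datetime import datetime, timedelta, timezone


def token_covers_stage(expires_at, expected_stage_duration, now=None,
                       safety_margin=timedelta(minutes=2)):
    """Return True if the token should outlive the stage plus a safety margin."""
    if now is None:
        now = datetime.now(timezone.utc)
    remaining = expires_at - now
    return remaining >= expected_stage_duration + safety_margin
```

If the check returns False, the pipeline can request a fresh token up front rather than fail halfway through the rollout.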

Platform updates break existing functions

The provider upgrades the runtime or OS with minimal notice; bindings or libraries that rely on specific behavior abruptly cease to function.

Scale-in evicts warm instances

After a spike, the autoscaler kills idle containers. The next spike starts from cold again, creating a saw-tooth response-time pattern.

Retry loops drown throughput

Automatic retries on queues/topics snowball: each failure spawns multiple copies of the same message, clogging workers and delaying tasks that could finish on time.
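
The effect is easy to reproduce with an in-memory queue. The sketch below is a simulation, not a real queue client: `drain`, the `deliveries` counter, and the default of three deliveries are assumptions for illustration. It caps redeliveries and parks a failing message in a dead-letter list so it stops competing with healthy work.

```python
from collections import deque


def drain(queue, process, max_deliveries=3):
    """Process queued messages, parking any that fail max_deliveries times."""
    processed, dead_letters = [], []
    attempts = 0
    while queue:
        msg = queue.popleft()
        attempts += 1
        msg["deliveries"] = msg.get("deliveries", 0) + 1
        try:
            process(msg["body"])
            processed.append(msg["body"])
        except Exception:
            if msg["deliveries"] >= max_deliveries:
                dead_letters.append(msg["body"])  # stop retrying this one
            else:
                queue.append(msg)  # redelivered behind the remaining work
    return processed, dead_letters, attempts
```

Without the cap, a single poison message keeps cycling through the queue and every cycle is a billed execution.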

Short timeouts kill long-running tasks

Defaults are often five minutes or less. Functions that call sluggish APIs or run heavy transcoding or ETL workloads can hit the limit: they are terminated at 99% completion and restart from scratch, burning CPU cycles and budget.
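
The restart-from-scratch part is what hurts most, and it goes away if the work is split into items with a saved position. A minimal sketch, assuming the job can be split that way: `run_with_checkpoint` is a hypothetical helper, and the `checkpoint` dict stands in for durable storage (a blob, a table row) that a real function would need.

```python
import time


def run_with_checkpoint(items, work, checkpoint, budget_s, clock=time.monotonic):
    """Process items from the saved position until done or out of time budget.

    Returns True when every item has been processed.
    """
    start = clock()
    i = checkpoint.get("next", 0)
    while i < len(items):
        if clock() - start >= budget_s:
            break  # stop cleanly before the platform timeout hits
        work(items[i])
        i += 1
        checkpoint["next"] = i  # in practice, persist this outside the function
    return i >= len(items)
```

Set `budget_s` comfortably below the platform timeout so the next invocation resumes from `checkpoint["next"]` instead of item zero.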

Memory throttling causes surprise OOMs

When usage exceeds the “soft limit,” the runtime reduces the available memory instead of terminating the process outright. The function persists until a later allocation blows up—making the root cause difficult to pinpoint.

DB connections reopen every call

Functions are stateless by design; without manual connection pooling or an external startup routine, each run renegotiates TLS and database authentication. That adds latency and can overwhelm your data tier during peak traffic surges.
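
The usual workaround is to keep the connection at module scope so warm invocations reuse it. The sketch below uses the standard library's `sqlite3` purely as a stand-in for a real database driver; `get_connection` and `handle_request` are hypothetical names.

```python
import sqlite3

# Lives as long as the warm instance does; rebuilt only after a cold start.
_conn = None


def get_connection():
    """Create the connection on first use, then reuse it on later calls."""
    global _conn
    if _conn is None:
        _conn = sqlite3.connect(":memory:")  # stand-in for a real DB connection
    return _conn


def handle_request(event):
    """Hypothetical entry point that runs a trivial query on the shared connection."""
    conn = get_connection()
    return conn.execute("SELECT 1").fetchone()[0]
```

With a networked database, the same pattern saves the TLS handshake and authentication on every warm call, though each concurrent instance still holds its own connection.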

Is it worth it, then?

Absolutely—provided you know the terrain. Functions excel in event-driven workloads, bursty traffic, and one-off jobs, but they demand cold-start-aware design, centralized observability, and cost governance. In an upcoming article, I'll share mitigation tactics: pre-warming instances, connection pooling, smarter retries, and metrics that prove (or refute) the financial upside as volume grows.

Thanks for reading! If you know someone who’d find this valuable, please share it with them. Follow me on LinkedIn for more insights — see you next time!